Data Recording: It’s Simple, Except That It’s Hard

Historical data analysis is especially crucial for regulatory compliance and best execution requirements; however, it also allows firms to learn from the past and innovate for the future. Without accurate and reliable historical data on hand, firms can’t backtest new strategies or comply with regulations like Reg NMS, Reg SCI, or MiFID II in Europe.

Despite paying for pricey data feeds, a number of firms don’t collect and record their own data, citing the cleaning and storing of data as expensive and time-consuming. Refinitiv’s research shows that for every $1 spent on market data, another $8 is spent on getting that data prepped for analysis.

However, buying third-party historical data often isn’t the best option either. Many vendors require a minimum purchase of data, which becomes an unnecessary expense when a use case for historical data covers as little as a single day. In addition, best execution analysis requires records from a firm’s own infrastructure, including a timestamped record of its unique view of market data.

The best option for many firms is to buy a managed solution for data collection, management, and replay. This allows in-house teams to focus on analysis and regulatory compliance. The Exegy Capture Replay (XCR) offers just that: a fully managed appliance that captures, stores, and delivers market data in a variety of formats. In today’s competitive trading environment, flawless execution and swift time to market for strategy updates are crucial, so offloading data management might be the next best move for firms.

Data Capture: Vigilance Required

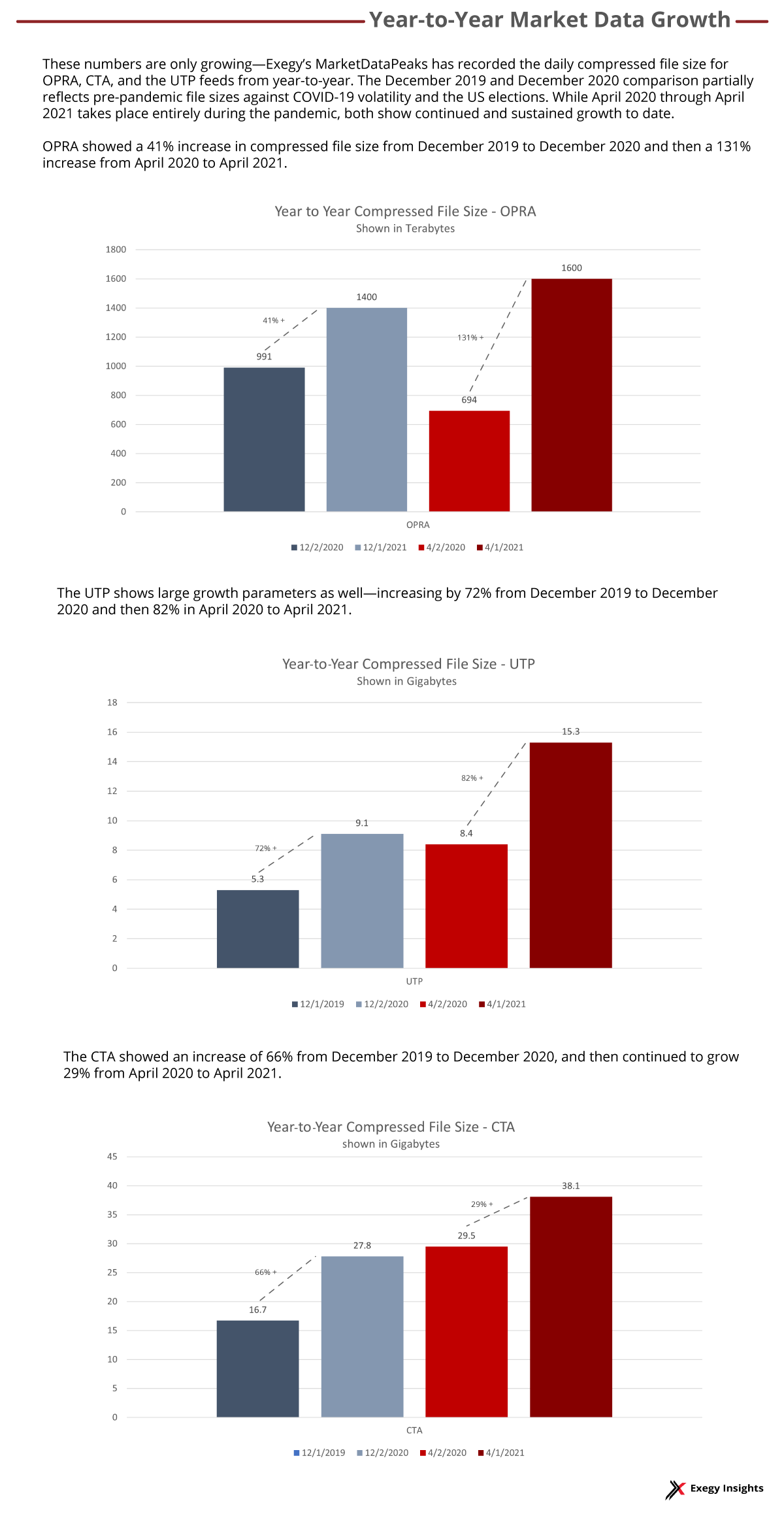

While data capture might seem simple in theory, it’s much harder in practice when scaled to the volume of market data that most firms consume. The Options Price Reporting Authority (OPRA) consolidated feed for US options alone produces about 20 TB of data during a single market session, about 10 times the data that North American Equities and Commodities produce. This amount of data makes reliable capture, formatting, and retrieval more difficult.

*Data Compression Ratio remained consistent at 20:1 for all figures.
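As a rough back-of-the-envelope sketch, the 20 TB session figure and the 20:1 compression ratio quoted above imply the following storage footprint for OPRA alone. This assumes the 20 TB figure is the uncompressed volume; the 252 trading days per year and the five-year retention period are also assumptions for illustration, not figures from this article.

```python
# Figures quoted in the text: ~20 TB of OPRA data per session, 20:1 compression.
RAW_TB_PER_SESSION = 20.0
COMPRESSION_RATIO = 20.0

# Assumptions for illustration only.
TRADING_DAYS_PER_YEAR = 252
RETENTION_YEARS = 5


def compressed_tb(sessions: int) -> float:
    """Compressed storage (TB) needed to keep `sessions` market sessions."""
    return sessions * RAW_TB_PER_SESSION / COMPRESSION_RATIO


per_session = compressed_tb(1)
per_year = compressed_tb(TRADING_DAYS_PER_YEAR)
retained = compressed_tb(TRADING_DAYS_PER_YEAR * RETENTION_YEARS)
print(f"{per_session:.0f} TB/session, {per_year:.0f} TB/year, {retained:.0f} TB retained")
```

Even after compression, a single feed kept for several years lands in petabyte territory under these assumptions.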

Dropping data is never good, but can happen when systems run at or over capacity—a likely scenario for firms not keeping up with growing data volumes. One mitigating solution is buffering—essentially allowing packets to queue while processing and storing catches up. Buffers need to be large and strategically placed or they run the risk of dropping captured data before it is saved to persistent storage. A managed service that constantly monitors and configures buffers, ensuring they’re placed correctly with sufficient capacity, can make the difference between a complete data archive and “Swiss cheese.”
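To make the buffering trade-off concrete, here is a minimal sketch of a fixed-capacity buffer sitting between packet capture and persistent storage. The class name, the tick-based simulation, and all the numbers are hypothetical; a production capture system would use lock-free ring buffers in native code rather than a Python queue.

```python
from __future__ import annotations

from collections import deque


class CaptureBuffer:
    """Fixed-capacity FIFO between the capture stage and the storage writer."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: deque[bytes] = deque()
        self.dropped = 0

    def push(self, packet: bytes) -> bool:
        """Queue a packet; count it as dropped if the buffer is already full."""
        if len(self.queue) >= self.capacity:
            self.dropped += 1
            return False
        self.queue.append(packet)
        return True

    def drain(self, max_packets: int) -> list[bytes]:
        """Hand up to `max_packets` queued packets to persistent storage."""
        count = min(max_packets, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


def simulate(capacity: int, burst: int, drain_per_tick: int, ticks: int) -> int:
    """Return how many packets are dropped when `burst` packets arrive per tick."""
    buf = CaptureBuffer(capacity)
    for _ in range(ticks):
        for _ in range(burst):
            buf.push(b"pkt")
        buf.drain(drain_per_tick)
    return buf.dropped


small = simulate(capacity=100, burst=150, drain_per_tick=120, ticks=3)
large = simulate(capacity=1000, burst=150, drain_per_tick=120, ticks=3)
print(small, large)
```

With the same arrival and drain rates, the undersized buffer loses packets on every burst while the larger one absorbs them. A larger buffer only buys time, though: if storage never catches up, it eventually fills as well.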

Data from most exchanges is delivered in real-time using best-effort network protocols that do not guarantee delivery. To mitigate the risk of data loss, exchanges transmit redundant copies of feeds that allow recipients to arbitrate, or fill in missing packets from the redundant feed. Performing this arbitration function and presenting a complete and correctly sequenced recording of data to applications is a key requirement of data capture solutions. A managed service that monitors arbitration performance and adjusts buffers to maximize its effectiveness is a first line of defense for preventing gaps in data capture.
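The arbitration step can be sketched as follows, assuming each packet carries a monotonically increasing sequence number and arrives on one of two redundant lines labeled "A" and "B". The tuple layout and function name are hypothetical, and the sketch omits the gap timeout a real arbiter needs when a packet is lost on both lines.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple

Packet = Tuple[int, str, bytes]  # (sequence number, line "A" or "B", payload)


def arbitrate(arrivals: Iterable[Packet]) -> Iterator[Tuple[int, bytes]]:
    """Merge redundant A/B lines into one in-order, de-duplicated stream."""
    next_seq: Optional[int] = None
    pending: Dict[int, bytes] = {}  # out-of-order packets waiting on a gap
    for seq, _line, payload in arrivals:
        if next_seq is None:
            next_seq = seq  # assume the first packet seen starts the stream
        if seq < next_seq or seq in pending:
            continue  # duplicate: already delivered or already held
        pending[seq] = payload
        while next_seq in pending:
            yield next_seq, pending.pop(next_seq)
            next_seq += 1


# Packet 2 is lost on line A and recovered from line B.
arrivals = [
    (1, "A", b"m1"), (1, "B", b"m1"),
    (3, "A", b"m3"), (2, "B", b"m2"),
    (3, "B", b"m3"), (4, "A", b"m4"),
]
merged = list(arbitrate(arrivals))
print(merged)
```

The `pending` dictionary is itself a buffer: if it is too small or flushed too early, a packet that would have arrived on the other line is recorded as a gap instead.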

Common Formats of Market Data

Once captured, data can be stored in a variety of formats. Raw packet capture (PCAP) format is typically used to store data for long-term archival purposes, as it preserves the packets exactly as they were sent by the exchange. Storing PCAP files ensures that not only is all market data stored, but so is low-level network data describing the delivery of the market data. This includes valuable timestamps for when data was sent or received, the first step to understanding any system’s latency. Raw data also reveals how messages are bundled into packets and helps identify which packets were lost or delayed.
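To illustrate the timestamp and length metadata that travels with each packet, the sketch below reads the classic libpcap file layout (a 24-byte global header followed by 16-byte per-record headers) using only the standard library. It assumes a little-endian file with microsecond timestamps; nanosecond-resolution and pcapng captures use different layouts and are not handled. The sample bytes are synthetic.

```python
import io
import struct

PCAP_MAGIC_USEC = 0xA1B2C3D4  # classic pcap, microsecond timestamps
# magic, version major, version minor, timezone, sigfigs, snaplen, link type
GLOBAL_HEADER = struct.Struct("<IHHiIII")
# ts_sec, ts_usec, captured length, original length on the wire
RECORD_HEADER = struct.Struct("<IIII")


def read_records(stream):
    """Yield (timestamp in microseconds, captured bytes, original length)."""
    magic, *_ = GLOBAL_HEADER.unpack(stream.read(GLOBAL_HEADER.size))
    if magic != PCAP_MAGIC_USEC:
        raise ValueError("not a little-endian microsecond pcap file")
    while True:
        header = stream.read(RECORD_HEADER.size)
        if len(header) < RECORD_HEADER.size:
            return  # end of file
        ts_sec, ts_usec, incl_len, orig_len = RECORD_HEADER.unpack(header)
        yield ts_sec * 1_000_000 + ts_usec, stream.read(incl_len), orig_len


# Synthetic two-packet capture built in memory for the example.
sample = io.BytesIO(
    GLOBAL_HEADER.pack(PCAP_MAGIC_USEC, 2, 4, 0, 0, 65535, 1)
    + RECORD_HEADER.pack(1_700_000_000, 250_000, 4, 4) + b"\x01\x02\x03\x04"
    + RECORD_HEADER.pack(1_700_000_000, 750_000, 2, 60) + b"\xaa\xbb"
)
records = list(read_records(sample))
gap_us = records[1][0] - records[0][0]
print(gap_us)
```

The second record shows a packet that was truncated at capture time (2 bytes stored out of 60 on the wire), which is the kind of delivery detail a normalized format would not retain.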

Another popular format is Comma-Separated Values (CSV), which is commonly used in everything from Excel spreadsheets to complex machine learning applications written in scripting languages like Python. While CSV dialects can vary slightly, the core principle is the same: plain, readable values are separated by commas, which makes the data simple to work with. Exegy’s Signum team uses CSV format to store all data for their machine learning applications and predictive signals.
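As a small illustration of why CSV is convenient for analysis, the sketch below loads quote records with Python's standard `csv` module. The column names and sample rows are hypothetical and do not reflect any Exegy file layout; prices are parsed as `Decimal` to avoid binary floating-point rounding.

```python
import csv
import io
from decimal import Decimal

# Hypothetical layout: one top-of-book quote per row.
SAMPLE = """timestamp,symbol,bid,ask
2024-01-02T14:30:00.000001Z,ABC,10.01,10.03
2024-01-02T14:30:00.000450Z,ABC,10.02,10.03
"""


def load_quotes(text_stream):
    """Parse CSV rows into dicts with Decimal prices."""
    quotes = []
    for row in csv.DictReader(text_stream):
        quotes.append({
            "timestamp": row["timestamp"],
            "symbol": row["symbol"],
            "bid": Decimal(row["bid"]),
            "ask": Decimal(row["ask"]),
        })
    return quotes


quotes = load_quotes(io.StringIO(SAMPLE))
spreads = [q["ask"] - q["bid"] for q in quotes]
print(spreads)
```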

Application Programming Interfaces (APIs) allow applications to access exactly the data they need while abstracting away variations in delivery formats. The Exegy Client API (XCAPI) is an example of an API for normalized market data that provides consistency across all feeds, markets, and asset classes. This consistency allows users to easily incorporate new feeds or new markets into their applications, without the overhead of learning a new format or data model. XCAPI also delivers fine-grained timestamp data and allows users to define three composite views of pricing across a bespoke set of markets:

- User-Defined Best Bid and Offer (UBBO): a single pan-market best bid and offer view across a user-defined exchange set. This can provide clients with a faster and more accurate view of the NBBO than the SIP.

- User-Defined Quote Montage (UQM): a montage view of BBOs across multiple markets that provides breadth.

- User-Defined Composite Price Book (UCPB): a view of bid and ask spreads across multiple user-defined exchanges.
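The idea behind a user-defined best bid and offer can be sketched in a few lines: take the highest bid and the lowest ask across whichever exchanges the user selects. This is an illustration of the concept only, not the XCAPI interface; the `Quote` type, the `user_bbo` function, and the exchange codes are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Quote:
    bid: Decimal
    ask: Decimal


PriceAt = Tuple[str, Decimal]  # (exchange, price)


def user_bbo(
    quotes: Dict[str, Quote], exchanges: Iterable[str]
) -> Optional[Tuple[PriceAt, PriceAt]]:
    """Best bid and best offer across a user-defined set of exchanges."""
    selected = [(ex, quotes[ex]) for ex in exchanges if ex in quotes]
    if not selected:
        return None
    best_bid = max(selected, key=lambda item: item[1].bid)
    best_ask = min(selected, key=lambda item: item[1].ask)
    return (best_bid[0], best_bid[1].bid), (best_ask[0], best_ask[1].ask)


# Hypothetical per-exchange top-of-book quotes for one symbol.
book = {
    "X": Quote(Decimal("10.01"), Decimal("10.04")),
    "Y": Quote(Decimal("10.02"), Decimal("10.05")),
    "Z": Quote(Decimal("10.00"), Decimal("10.03")),
}
two_venues = user_bbo(book, ["X", "Y"])
all_venues = user_bbo(book, ["X", "Y", "Z"])
print(two_venues, all_venues)
```

Changing the exchange set changes the answer: with only X and Y selected the best offer is 10.04, while adding Z tightens it to 10.03.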

Backtesting for Application Development

Many use cases for historical market data require replay capabilities. Examples include backtesting new strategies, developing production trading applications, facilitating off-hours development, continuous integration testing, and application performance optimization.

All these processes are crucial for professional traders and require the ability to recreate various market conditions. The ability to play back data at precise rates, including multiples of the recorded rate, is essential for effective performance testing. Measuring the speed, scalability, and overall responsiveness of new applications ensures trading systems minimize operational risks while maximizing returns.
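Playing data back at a multiple of the recorded rate comes down to compressing the gaps between capture timestamps. The sketch below shows that arithmetic only; the function name is hypothetical, and a real replay engine would also need a high-resolution clock and a sender that can keep up with the schedule.

```python
def replay_schedule(capture_ts_ns, speed):
    """Map capture timestamps (ns) to send offsets (ns) at `speed` times the recorded rate."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    if not capture_ts_ns:
        return []
    start = capture_ts_ns[0]
    # Dividing each inter-packet offset by the speed factor preserves the
    # relative shape of bursts while raising the overall message rate.
    return [round((ts - start) / speed) for ts in capture_ts_ns]


recorded = [1_000, 3_000, 6_000, 6_500]
as_recorded = replay_schedule(recorded, 1)
stress = replay_schedule(recorded, 2.5)
print(as_recorded, stress)
```

At 2.5 times the recorded rate, the 5,500 ns captured above is replayed in 2,200 ns, with each burst kept in proportion.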

Backtesting a new strategy typically requires fine-tuning parameters while testing with the same data over and over. This is a repetitive but time-sensitive endeavor; signals and alpha opportunities don’t last forever. Tools that enable traders to automate backtests by specifying market data replay scenarios under program control can help firms capture more opportunities. These tools can also be used to test new automated trading applications in near-real-life environments to minimize operational risks prior to deployment. Being able to play back any amount of data from any time span via the same API that applications will use in real-time trading allows development to occur after the markets have closed and facilitates automated testing and continuous integration. The Exegy Capture Replay (XCR) appliance uniquely provides this set of tools for historical market data, ranging from top-of-book (Level 1) feeds to full-depth market-by-order (Level 3) feeds.
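The repetitive part of backtesting, rerunning one recorded data set under many parameter values, is straightforward to automate. The sketch below uses a deliberately naive toy rule and a made-up price series in ticks; the function names are hypothetical and the strategy is not a recommendation. In practice the price series would come from a replay of recorded market data.

```python
def backtest(prices, window):
    """Toy rule: hold one unit for the next step whenever the current
    price is above the mean of the previous `window` prices."""
    pnl = 0
    for i in range(window, len(prices) - 1):
        trailing_mean = sum(prices[i - window:i]) / window
        if prices[i] > trailing_mean:
            pnl += prices[i + 1] - prices[i]
    return pnl


def sweep(prices, windows):
    """Run the same recorded data through every candidate parameter value."""
    return {window: backtest(prices, window) for window in windows}


# Made-up prices in ticks, standing in for a replayed session.
recorded_prices = [100, 101, 102, 101, 103, 104, 102, 105]
results = sweep(recorded_prices, [2, 3, 4])
print(results)
```

Because every run sees identical input, differences in the results can be attributed to the parameter change alone.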

Regulatory Requirements

Beyond testing, replay is also necessary for complying with various regulations in the US and Europe. For US participants, Rule 611 of Regulation NMS, also known as the order protection rule, requires that agency brokers demonstrate that customers’ orders were filled at prices equal to or better than the National Best Bid and Offer (NBBO). This makes it crucial for brokers to record their own data just as it comes into their systems in order to analyze compliance.
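The core comparison described here can be sketched as a simple check of each fill against the NBBO the firm itself recorded at the time of execution. This is a simplified illustration of the price test only, with hypothetical names and values; it ignores the rule's exceptions and is not compliance logic.

```python
from decimal import Decimal


def at_or_better_than_nbbo(side, fill_price, nbb, nbo):
    """True if a fill is at or better than the recorded NBBO.

    A buy should pay no more than the national best offer (nbo);
    a sell should receive no less than the national best bid (nbb).
    """
    if side == "buy":
        return fill_price <= nbo
    if side == "sell":
        return fill_price >= nbb
    raise ValueError(f"unknown side: {side!r}")


# Hypothetical NBBO as seen by the firm when the order executed.
nbb, nbo = Decimal("10.02"), Decimal("10.03")
buy_ok = at_or_better_than_nbbo("buy", Decimal("10.03"), nbb, nbo)
buy_bad = at_or_better_than_nbbo("buy", Decimal("10.04"), nbb, nbo)
sell_ok = at_or_better_than_nbbo("sell", Decimal("10.02"), nbb, nbo)
print(buy_ok, buy_bad, sell_ok)
```

The check is only as good as its inputs, which is why the NBBO values must come from the firm's own timestamped recording rather than a third-party reconstruction.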

Exchanges and the largest Alternative Trading System (ATS) venues answer to the regulatory requirements of Rule 1001(a) of Regulation SCI, which states that the resiliency and capacity of their systems must be tested by replaying historical market data at a multiple of historical peak rates.

Similar requirements apply to European participants under Article 48 of MiFID II, which mandates that participants be able to execute trades in unexpected circumstances like “severe market stress.” Article 48 sets a number of requirements for electronic trading and infrastructure capability that would need to be tested by a product capable of simulating advanced market conditions. Exegy’s XCR can test infrastructure at over 2.5x the normal market data rate.

The Exegy Capture Replay (XCR)

The challenges that come with capturing, storing, managing, and utilizing large data banks can drain resources and be extremely error-prone. Yet, it’s a vital process that allows the discovery of new strategies for generating alpha. Offloading this task can increase efficiency by ensuring that research and development teams are getting the data they need in the formats they need, subsequently reducing the time it takes to innovate.

Exegy’s Capture Replay is a fully featured appliance that allows firms to reduce the time, cost, and staff required to meet their historical market data requirements. It also drives the Exegy Historical Data Service that is trusted by FINRA for the Consolidated Audit Trail (CAT) for US equities and options. Firms using the XCR can be confident that it is capable of addressing some of the most demanding use cases in capital markets.

To learn more about how Exegy can help your firm handle its historical market data needs, request a free consultation with our market data experts.