AI and Machine Learning for Predictive Trading — Part 2

The first part of this blog series details the evolution of high-speed trading and how having reached low latency the industry is turning towards predictive trading.

In the era of data driven trading, understanding how different players in the market use machine learning can lend insight to firms beginning to implement trading strategies driven by predictive signals.

Machine learning signals for mining alpha or optimizing execution algorithms can be developed in-house or purchased from providers, or both. The decision process starts with understanding the breadth of signals machine learning technology can offer and planning an informed route to implementation.

The Wide World of Market Indicators

Predictive trading signals can estimate a wide range of market parameters including the direction of price movements, the magnitude of that movement, when the price will move, and how much liquidity will become available when it does.

This plethora of possible signals can be applied to strategies with various holding periods from next tick to next quarter. For example, market-makers use signals to improve latency arbitrage strategies that require next tick indicators. Training a machine learning model that can predict the timing and direction of price movements requires historical market data from multiple venues. Once the training process is complete real-time feeds from those same venues are needed as input data.

Strategies with longer hold periods can use different types of data to bolster their insights. Predicting price movements over the next three quarters could require end-of-day data, political and corporate news data, or qualitative data for fundamental analysis on individual companies.

Buy-side firms with strategies involving alpha cloning could use end-of-day data and machine learning algorithms to reveal major position changes by institutional investors faster than using regulatory information, such as 13-F and Form 4 filings.

Alternative data can be used widely with many strategies for both long and short holding periods. For example, industry specific approaches like using credit card transaction history to predict consumer spending for the quarter or climate information as an indicator of potential electricity use by household per month.

Normalizing, Labeling, and Interpreting Data

No matter which strategy is employed, all machine learning datasets need to be normalized, interpreted, or labelled (for supervised learning). These factors affect the time it takes to develop a signal and the resources needed to complete the project.

Normalization and data cleaning—such as min-max scaling—is a must do process for algorithm generalization that firms will already be familiar with. However, it does not always end there. Algos that use alternative data like those that employ Natural Language Processing (NLP) to data-mine news and press releases require extra steps for interpretation and application. NLP technology must be trained to recognize colloquial language and human sentiment before it can be used as a signal.

Image-based data, like an overhead view of a parking lot, may require applications to first learn to recognize cars and count them. Then, the correlation between these metrics and their effect on market data needs to be mapped out. For example, there would have to be a clear connection between the number of cars in a parking lot and the financial performance of the businesses within that lot for it to be applicable.

Even using well-structured market data has its challenges. All data used to train supervised learning models must first be labeled. This can be a labor-intensive task; 96% of companies that have started machine learning projects run into labeling issues and many end up outsourcing that stage of development.

Supervised and Unsupervised Learning

Based on what is being predicted and at what time horizon, experts will be able to determine the data that needs to be sourced and the kind of machine learning method required to train the model.

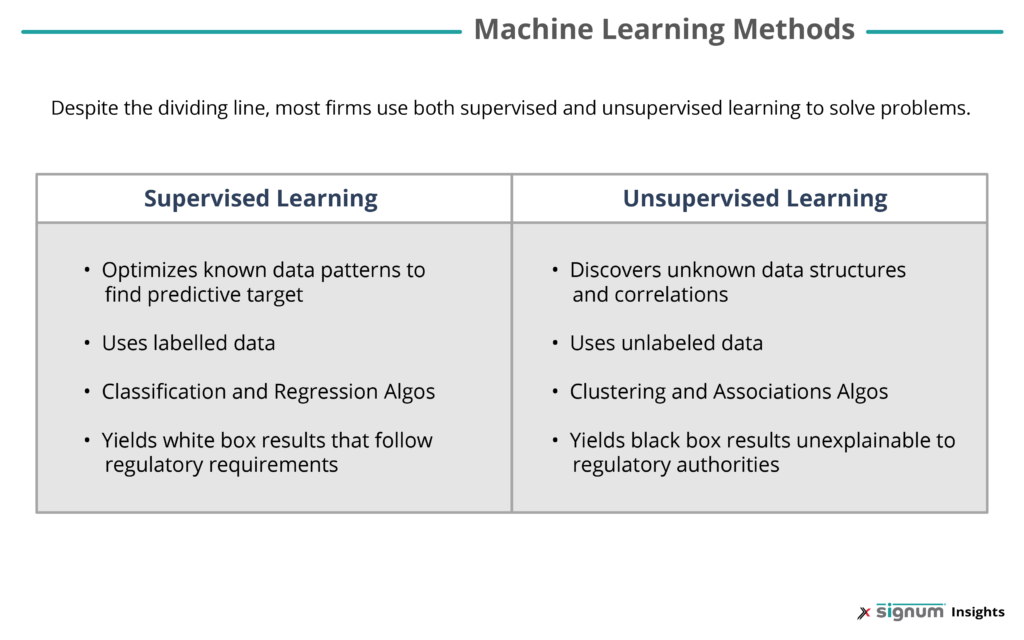

There are multiple methods of machine learning, but the two most associated with financial applications are supervised and unsupervised learning.

The supervised learning method of machine learning can read normalized, labeled data and make future predictions based on past classification or regression modeling. For example, JP Morgan’s AI solution for FX is called DNA (Deep Neural Network for Algo Execution) and is capable of advising clients on best execution and order routing based on historical trade data.

Unsupervised learning is a more exploratory approach. This model is fed unlabeled data and asked to categorize or cluster it to discover unknown structure. This method requires much more data to learn and is more commonly associated with alternative data in popular media, but both supervised and unsupervised learning are just as likely to use alternative data. It is good to remember that these two learning methods are not black and white. Often companies will employ both methods to solve problems.

Knowing what kind of machine learning technology and which datasets need to be sourced will help firms determine the kind of resources they need to implement a signal—most importantly, whether to develop that signal in-house or vend it from a third-party provider.

In-House Development

According to Forbes, 98.8% of firms are investing in AI as of 2020, but only 14.6% have put products into the market. These numbers show a large demand with a minimal amount of competition from other firms, building a strong case for the potential profit of in-house development.

This demand likely will not cease. Industry experts warn that as predictive trading signals are identified and used by more and more companies, they will lose their edge. This is called alpha bleed or alpha decay and operates off the fact that a lot of aspects of trading can be a zero-sum game; as the number of firms accessing the same source of alpha from one signal increase, the amount of alpha captured by each firm decreases (on average).

The best way to combat this is to continually develop predictive trading signals to capture new sources of alpha and to constantly tweak existing ones to capture larger shares of known alphas. Another way to combat this is to build more robust models that are less likely to lose alpha over time.

The industry-wide demand for predictive trading signals has translated into demand for computer scientists with AI expertise, who are becoming harder to find and more expensive to hire. According to BNY Mellon, the number of workers trained to use AI amounts to only a few thousand. Despite that expense, the sell-side giant Goldman Sachs has found that one computer scientist is worth four traders in terms of productivity.

While the long game might be worth it to some, there are a lot of risks associated with developing signals in-house. It can be extremely time and resource consuming, with no sure-fire guarantee of success. Besides data scientists with machine learning expertise and computer scientists with trading systems experience, firms will need to hire domain experts that can connect financial data to specific use cases.

The more complex the signal the more resources, time and risk are associated with it. Fortunately, an option to buy predictive signals has emerged from pioneering vendors with the vision and technical expertise to meet industry demand. Exegy built Signum—a suite of trading signals—by recruiting a team of data scientists and integrating them with their experienced team of engineers and domain experts.

Third Party Solutions

Now, Exegy sells subscriptions to its suite of signals for a price equal to the average salary of two data scientists, making it a viable third-party option for risk-averse firms. Economy of scale allows companies like Exegy to amortize development costs over a multitude of firms. Firms that choose to use vendor solutions save money and can get to market quicker; solutions can be implemented in weeks as opposed to the years that go into internal development processes.

Successful signal vendors must provide sufficient flexibility and customization to users to maximize the number of use cases and to avoid risks of alpha decay. Exegy has a suite of signals, including two with a threshold firing system, Quote Fuse and Quote Vector, that predict when price movements will occur and what direction they’ll go in. Our third signal, Liquidity Lamp, can predict how much liquidity will become available when these movements occur. Clients can set different thresholds giving them the ability to control the level of accuracy they desire based on the amount of risk their strategy will tolerate. This allows users to tune the tradeoff between signal accuracy and frequency. The strategies with high accuracy thresholds to guard against risk will fire off less often. Whereas strategies with lower accuracy thresholds will take on more risk but have more frequent opportunities to use the predictions.

As a signal provider, Exegy maintains the efficiency and accuracy of its suite of signals-as-a-service by reassessing daily and adjusting when necessary. With a team of experienced, knowledgeable data scientists and developers, Exegy can help firms leverage predictive signals no matter what step of the process they are in. For firms creating their own signals, using Signum can quickly establish a foundation and allow proprietary efforts to focus on integration and optimization. For more information on Signum or how Exegy can help, contact us.

Want to learn more about Signum signals? See how they can be used to build alpha cloning strategies and improve execution.